Hi!

I’m Rick Hurst, a professional full-stack developer based in Bristol, UK.

I have over two decades of commercial experience in the web and software industry, ranging from senior roles in busy agencies to freelance consultancy for government agencies, distribution companies and charities.

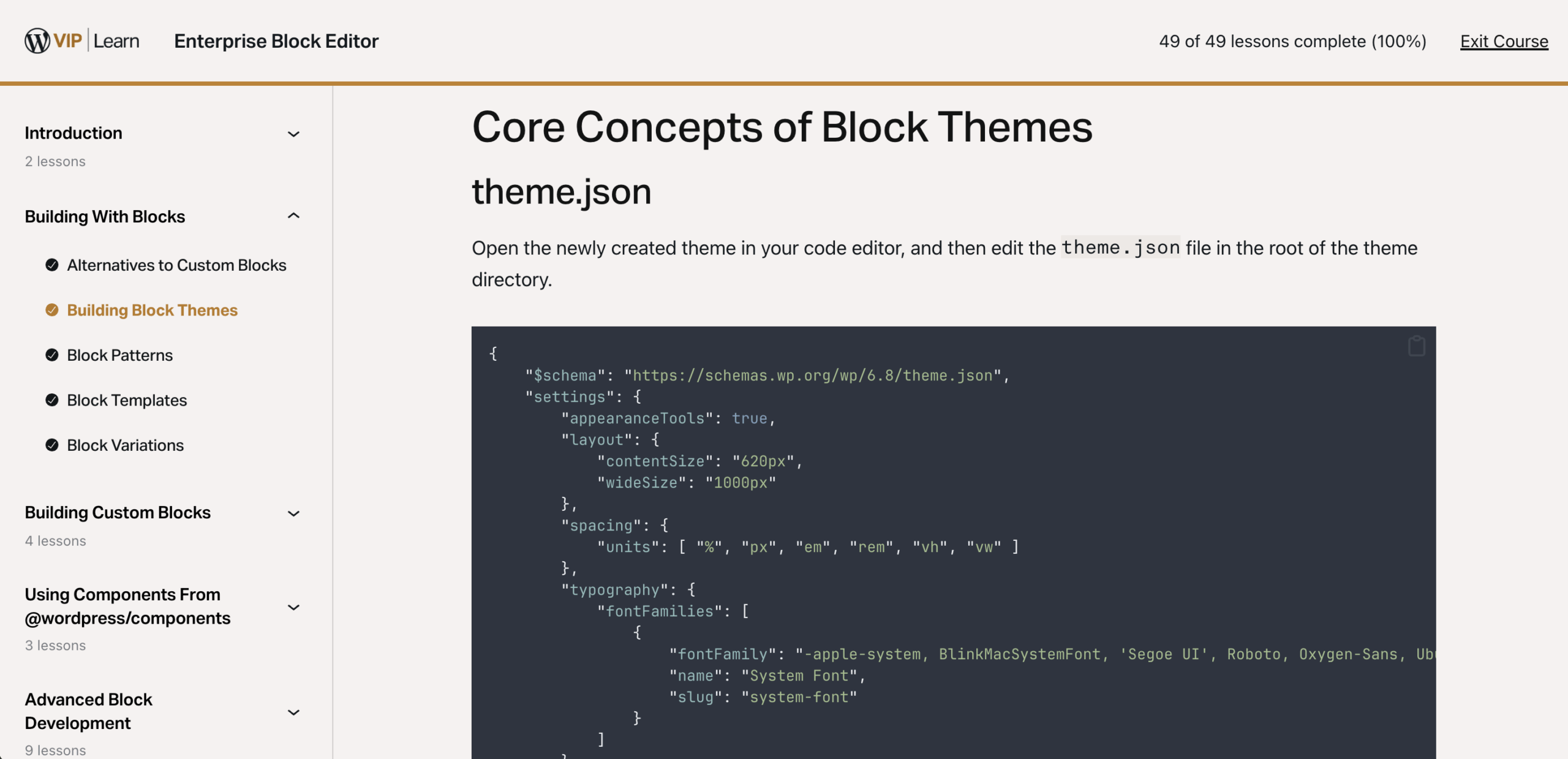

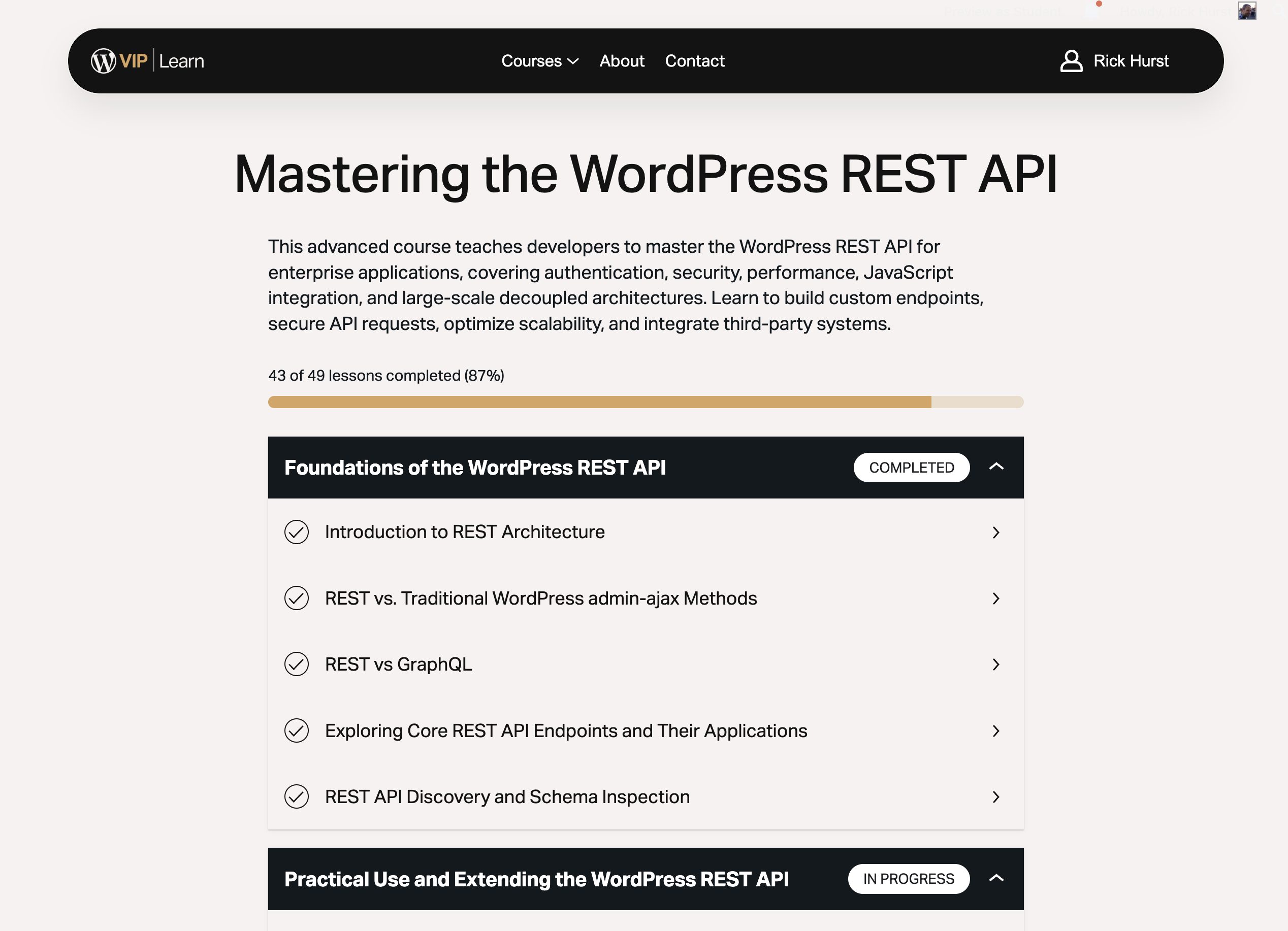

I work full-time at Automattic as a developer for WordPress VIP, helping our customers run WordPress at scale and creating courses for advanced WordPress developers.

My very neglected blog covers tech ramblings from the past two decades, interspersed with campervan content.