My current tools and tech in 2019

I try to avoid jumping on every new trend which comes along in the web world, especially over the last few years, where things seem to have moved very fast, with javascript frameworks becoming obsolete before i’ve even got round to trying them, but I also need to keep my skills and tech relevant. Here’s what i’m using as of late summer 2019.

Editor/IDE

I’ve been consistently using Sublime Text (Version 2 because I never got round to upgrading to 3) for years as my code editor, though i’ve never really taken full advantage of the extensions and customisations available. It just works and I saw no real need to change. One thing it has never done well is working over ssh, or rather from a mounted sshfs folder on a mac. I have occasional need to do this, when using the likes of nano in a terminal doesn’t feel good enough, and it’s one thing that I missed from Coda, when I used that for a while, years ago.

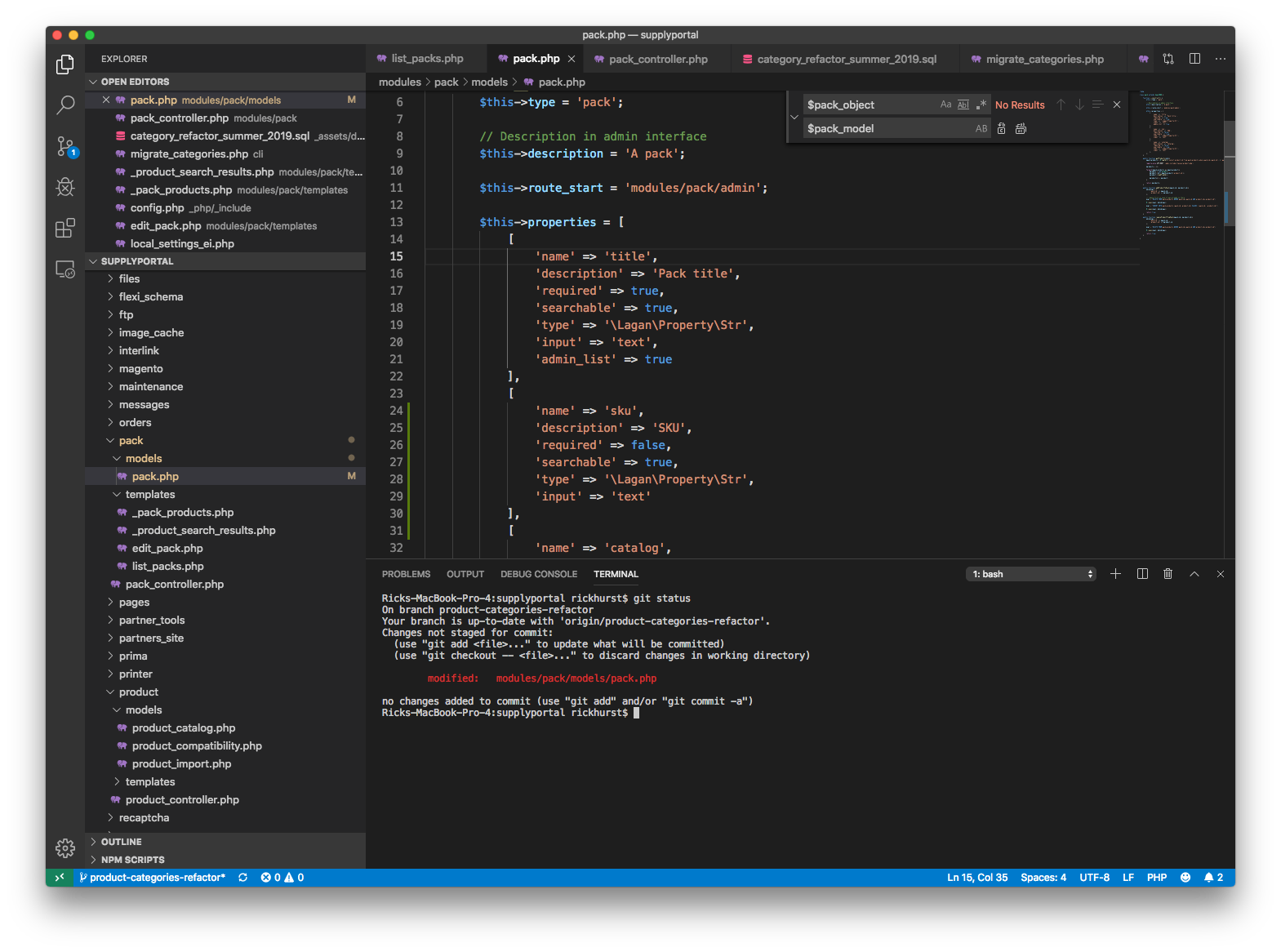

Then after spotting a tweet from an old colleague mentioning remote SSH editing in VS Code (via the Remote – SSH extension), I thought i’d take a look at it. Firstly, having used old-skool Microsoft Visual Studio in the past, I was surprised to see that Microsoft had built and released such a cool free editor, with builds for windows, mac and even linux. It’s already become my default editor – the intellisense and built-in terminal and git functionality is really good. There’s a lot there to explore and a ton of extensions. It also looks good.

Languages and frameworks

Most of my work is currently PHP. I no longer feel the need to apologise for that! PHP 7 is mature, stable and fast. I work on a couple of legacy PHP projects, one of which uses a combination of bespoke MVC code, an old fork of codeigniter (currently being refactored out) and Redbean ORM, and the other uses Zend framework, but is being re-built as a de-coupled app with Lumen (micro-framework by Laravel) on the back end and Vue.js on the front-end.

I haven’t done any web projects with Python recently (though i’m keen to try Pyramid), but I have been using it for a custom FTP server, using pyftpdlib (yes, FTP – for an old-skool EDI project – an old, but still very widely used technology where XML and FTP are used to exchange data – probably predating the invention of JSON and REST). I’ve also been using python for a raspberry pi datalogger project, reading serial data from microcontrollers via USB and then relaying it to a REST API.

JavaScript-wise, I still use bits and pieces of jQuery to get stuff done while I learn Vue.js properly, which is happening as part of a current aforementioned web app rebuild. We settled on Vue.js instead of React because Vue seems to be a popular component in the Lumen/Laravel ecosystem.

For CSS, i’ve settled for now on SASS and Gulp. Sass is a keeper, i’m just using Gulp until I find something that I like better. I tend to use Susy for grid/layout.

Ansible is my go-to tool for devops – I use it to provision web servers and for formerly manual tasks such as migrations of websites, moving content between dev, live and staging on wordpress sites etc. For local development of any sort I almost always use Vagrant now, with an ansible provisioning script, which gives me a very portable set-up, provided I have enough RAM to keep multiple virtual machines running as I juggle projects.

During my last foray back into a day-job (back in 2016), working at an agency, I got a fair amount of experience using wordpress as a CMS, including for some fairly complex projects, relying heavily on the Advanced Custom Fields (ACF) plugin. Now, historically I haven’t always been a fan of WordPress, but it proved to be an excellent tool when used with ACF, making the creation of custom page types incredibly quick and easy compared to other Content Management Systems i’ve used, and even compared to using frameworks which would be my usual preference for custom content. Being able to create custom fields, included nested repeater fields via the WordPress admin, having the field definition saved to the filesystem and then versioned in GIT for seamless syncing to production is a game changer!

Mike 2010-10-27 21:29:18

Maurits van Rees 2010-10-27 21:42:13

Rick 2010-10-27 21:58:26

I agree with you re: transmogrifier which is (partially) why I wrote http://pypi.python.org/pypi/parse2plone

Keep the blogs coming 🙂

Alex Clark 2010-10-28 00:50:01